
Sqoop import to Hive

$ sqoop <<tool-name>> \
    {generic-arguments} \
    {hive-arguments}

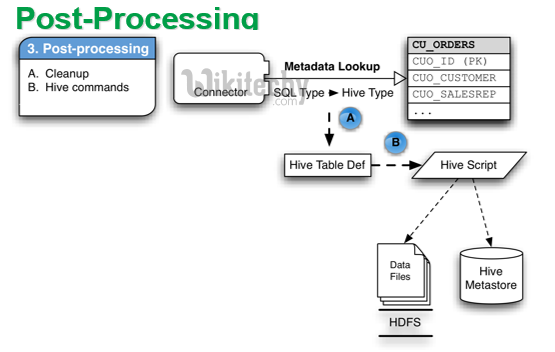

- The create-hive-table tool populates a Hive metastore with a definition for a table based on a database table previously imported to HDFS, or one planned to be imported.

$ sqoop create-hive-table (generic-args) (create-hive-table-args)

$ sqoop-create-hive-table (generic-args) (create-hive-table-args)

Example:

$ sqoop create-hive-table \
    --connect "jdbc:mysql://localhost/<<DB-Name>>" \
    --username root -P \
    --table <<Table-Name>> \
    --hive-table <<DB-Name>>.<<Table-Name>>

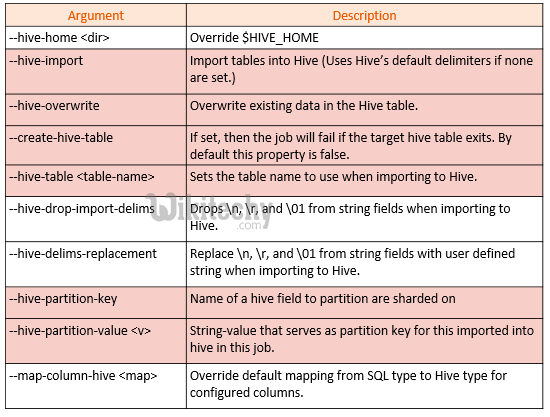

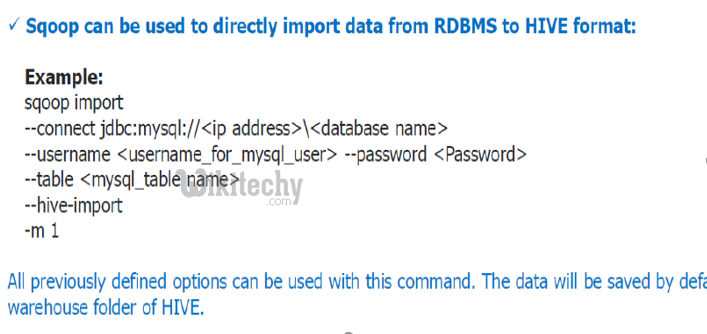

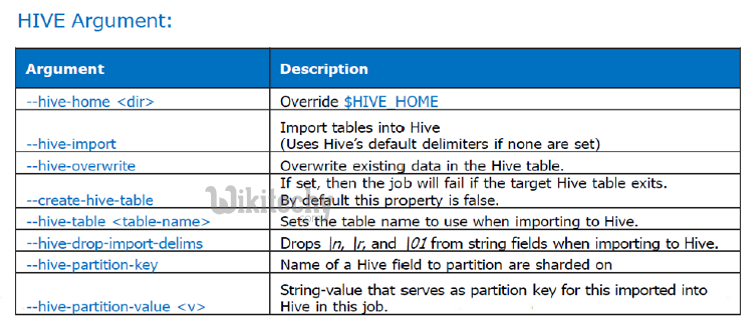
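
Once create-hive-table has run, the new definition can be checked from the Hive side. The following is a minimal sketch, assuming the hive command-line client is on the PATH and that the table was created as employees in the default Hive database; HIVE_DB, HIVE_TABLE, DRY_RUN and describe_table are illustrative names for this example only. With DRY_RUN=1 (the default here) the command is printed rather than executed.

```shell
#!/bin/sh
# Sketch only: HIVE_DB, HIVE_TABLE, DRY_RUN and describe_table are
# illustrative names chosen for this example.
HIVE_DB="${HIVE_DB:-default}"
HIVE_TABLE="${HIVE_TABLE:-employees}"

# Print the Hive command when DRY_RUN is 1 (the default), otherwise run it.
describe_table() {
  query="DESCRIBE FORMATTED ${HIVE_DB}.${HIVE_TABLE};"
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "hive -e \"${query}\""
  else
    hive -e "${query}"
  fi
}

describe_table
```

Running it with DRY_RUN=0 asks Hive for the column list and storage details of the table, which should match the columns of the source database table.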

- If you want to move data directly from a structured data store into the Hive warehouse, use --hive-import.

- If the table already exists in Hive and you want to overwrite its contents, use --hive-overwrite.

- If the table definition does not yet exist in the Hive warehouse, use --create-hive-table. With this option set, the job fails if the target Hive table already exists.

$ sqoop import \
    --connect "jdbc:mysql://localhost/classicmodels" \
    --username root -P \
    --table employees \
    --target-dir /usr/hive/warehouse/<<db-name>>.db \
    --fields-terminated-by "," \
    --hive-import \
    --create-hive-table \
    --hive-table <<DB-Name>>.<<Hive-table>>

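
The command above covers --hive-import and --create-hive-table. The --hive-overwrite case from the list can be sketched in the same way. This is a minimal sketch, assuming the classicmodels database and employees table used above and a Hive table named employees that already exists; DB, TABLE, DRY_RUN, run and import_overwrite are illustrative names, not part of Sqoop. With DRY_RUN=1 (the default here) the command is only printed, without shell quoting.

```shell
#!/bin/sh
# Sketch only: DB, TABLE, DRY_RUN, run and import_overwrite are illustrative
# names chosen for this example; they are not part of Sqoop.
DB="${DB:-classicmodels}"
TABLE="${TABLE:-employees}"

# Print the command when DRY_RUN is 1 (the default), otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Re-import the table and replace the data already in the Hive table.
# --create-hive-table is left out because the Hive table is assumed to exist.
import_overwrite() {
  run sqoop import \
    --connect "jdbc:mysql://localhost/${DB}" \
    --username root -P \
    --table "${TABLE}" \
    --fields-terminated-by "," \
    --hive-import \
    --hive-overwrite \
    --hive-table "${TABLE}"
}

import_overwrite
```

Set DRY_RUN=0 to run the import for real; -P then prompts for the database password as in the other examples.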

Important points to note about Sqoop

$ sqoop import \
    --connect jdbc:mysql://localhost/classicmodels \
    --username root -P \
    --table employees \
    --columns <<column-name>> \
    --where "<<condition>>" \
    --create-hive-table \
    --hive-import \
    --hive-table employees \
    --hive-partition-key "<<conditioned-column-name>>" \
    --hive-partition-value "<<condition-column-value>>"

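
To make the placeholders concrete, the partitioned import could be filled in as follows. This is a minimal sketch, not a definitive recipe: the column names (employeeNumber, lastName, firstName, jobTitle, officeCode) are assumed from the classicmodels sample schema, and DRY_RUN, run and import_partition are illustrative names. The partition column officeCode is deliberately left out of --columns, since Sqoop generally refuses an import in which the partition key is also one of the imported columns. With DRY_RUN=1 (the default here) the command is only printed, without shell quoting.

```shell
#!/bin/sh
# Sketch only: column names are assumed from the classicmodels sample schema;
# DRY_RUN, run and import_partition are illustrative names, not part of Sqoop.

# Print the command when DRY_RUN is 1 (the default), otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Import the employees of one office into a static Hive partition.
# $1 is the officeCode value, used for both the filter and the partition.
# --create-hive-table is left out so the function can be called again for
# another office once the Hive table exists.
import_partition() {
  office="$1"
  run sqoop import \
    --connect "jdbc:mysql://localhost/classicmodels" \
    --username root -P \
    --table employees \
    --columns "employeeNumber,lastName,firstName,jobTitle" \
    --where "officeCode = '${office}'" \
    --hive-import \
    --hive-table employees \
    --hive-partition-key officeCode \
    --hive-partition-value "${office}"
}

import_partition 1
```

Calling import_partition with a different office code and DRY_RUN=0 would load that office's rows into their own partition of the same Hive table.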
