
Sqoop

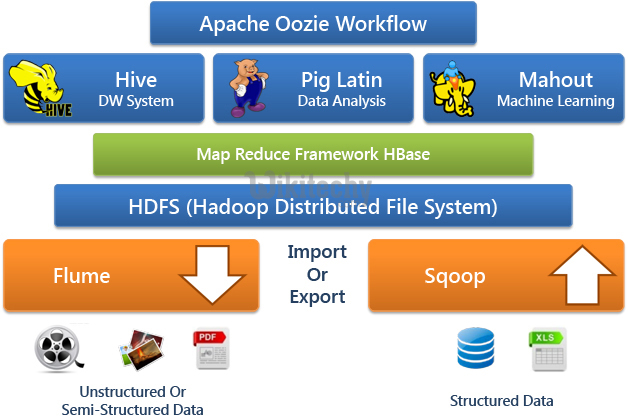

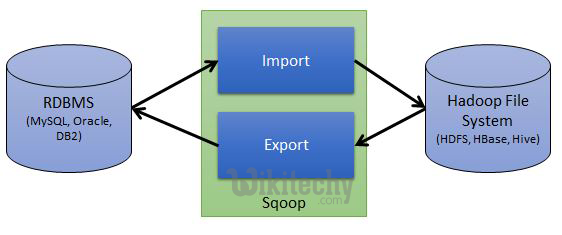

- Sqoop is a command-line interface application for transferring data between relational databases and Hadoop.

- It supports incremental loads of a single table or of a free-form SQL query. It also supports saved jobs, which can be run repeatedly to import the updates made to a database since the last import.
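Here is a minimal sketch of the idea behind an incremental (append-mode) import. It assumes an in-memory SQLite database in place of MySQL or Oracle. It also uses a hypothetical `incremental_import` helper and a plain dict as the "saved job" state, so it is not Sqoop's own code. Sqoop exposes this behavior through options such as `--incremental append`, `--check-column`, and `--last-value`. The principle is the same: fetch only rows whose check column is greater than the last value imported, then remember the new highest value for the next run.

```python
import sqlite3


def incremental_import(conn, table, check_column, last_value):
    """Fetch rows whose check_column exceeds last_value; return (rows, new_last_value).

    Table and column names are interpolated into the SQL text, so this sketch
    assumes they come from trusted configuration, not user input.
    """
    cur = conn.execute(
        f"SELECT * FROM {table} WHERE {check_column} > ? ORDER BY {check_column}",
        (last_value,),
    )
    rows = cur.fetchall()
    # Locate the check column so the highest value seen can be remembered.
    col_names = [d[0] for d in cur.description]
    idx = col_names.index(check_column)
    new_last_value = rows[-1][idx] if rows else last_value
    return rows, new_last_value


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, item TEXT)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, "pen"), (2, "ink")])

    # Hypothetical saved-job state: the table, the check column, and the last value imported.
    job = {"table": "orders", "check_column": "id", "last_value": 0}

    # First run: imports every row after id 0.
    rows, job["last_value"] = incremental_import(
        conn, job["table"], job["check_column"], job["last_value"]
    )
    print(rows, job["last_value"])  # [(1, 'pen'), (2, 'ink')] 2

    # A new row arrives; the next run picks up only that row.
    conn.execute("INSERT INTO orders VALUES (3, 'paper')")
    rows, job["last_value"] = incremental_import(
        conn, job["table"], job["check_column"], job["last_value"]
    )
    print(rows, job["last_value"])  # [(3, 'paper')] 3
```

Saving `last_value` between runs is what allows the same job to be rerun safely without re-importing old rows.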

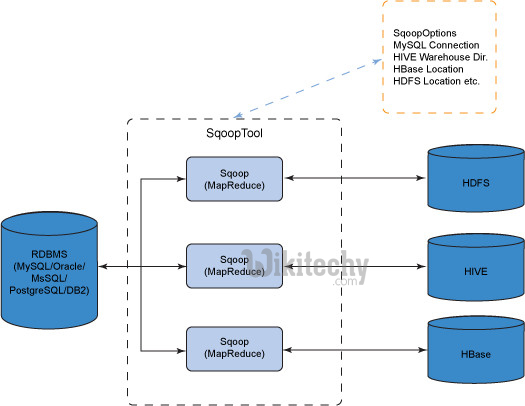

- Using Sqoop, data can be moved from MySQL, PostgreSQL, Oracle, SQL Server, or DB2 into HDFS, Hive, or HBase, and exported from HDFS back to those databases.
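The two directions can be sketched as a round trip. Rows are first dumped from a table as comma-delimited text lines, which is roughly what a text-file import writes into HDFS. Those lines are then parsed and inserted into another table, which is what an export does. This is a simplified illustration only: SQLite stands in for the source and target databases, a Python list stands in for the HDFS files, and the helper names `import_table` and `export_lines` are hypothetical. There is also no handling of NULLs, quoting, or delimiters that appear inside values.

```python
import sqlite3


def import_table(conn, table, delimiter=","):
    """Return each row of `table` as one delimited text line, as a text-file import would."""
    cur = conn.execute(f"SELECT * FROM {table}")
    return [delimiter.join(str(value) for value in row) for row in cur]


def export_lines(conn, table, lines, delimiter=","):
    """Split delimited lines into fields and INSERT them into `table`; return the row count."""
    rows = [line.split(delimiter) for line in lines]
    if not rows:
        return 0
    placeholders = ", ".join("?" for _ in rows[0])
    conn.executemany(f"INSERT INTO {table} VALUES ({placeholders})", rows)
    conn.commit()
    return len(rows)


if __name__ == "__main__":
    source = sqlite3.connect(":memory:")
    source.execute("CREATE TABLE emp (id INTEGER, name TEXT)")
    source.executemany("INSERT INTO emp VALUES (?, ?)", [(1, "ann"), (2, "bob")])

    lines = import_table(source, "emp")  # stands in for files written to HDFS
    print(lines)

    target = sqlite3.connect(":memory:")
    target.execute("CREATE TABLE emp (id INTEGER, name TEXT)")
    print(export_lines(target, "emp", lines))
    print(target.execute("SELECT * FROM emp").fetchall())
```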


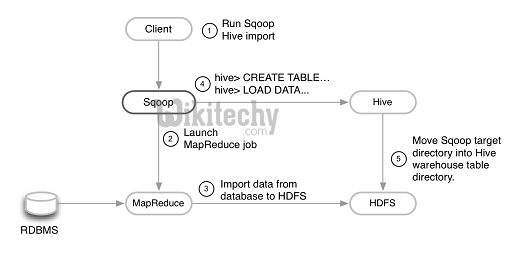

How Sqoop Works

Step 1:

- Sqoop sends a request to the relational database asking it to return the metadata for the table. Here, metadata means the information that describes the table in the relational database, such as its column names and data types.
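Step 1 can be illustrated with a metadata lookup. Sqoop makes its metadata calls through JDBC. In this sketch, SQLite's `PRAGMA table_info` stands in for those calls, and `table_metadata` is a hypothetical helper name. The result is the kind of information Sqoop needs before it can generate code: each column's name and declared type.

```python
import sqlite3


def table_metadata(conn, table):
    """Return (column_name, declared_type) pairs describing `table`."""
    # Each PRAGMA table_info row is (cid, name, type, notnull, dflt_value, pk).
    return [(row[1], row[2]) for row in conn.execute(f"PRAGMA table_info({table})")]


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE emp (id INTEGER PRIMARY KEY, name TEXT, salary REAL)")
    print(table_metadata(conn, "emp"))
    # [('id', 'INTEGER'), ('name', 'TEXT'), ('salary', 'REAL')]
```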

Step 2:

- From the received metadata, Sqoop generates Java classes. This is why Java must be configured before Sqoop can work: Sqoop is written in Java and uses the JDBC API internally to access the data.
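Step 2 can be pictured as turning the column list into Java source text. The sketch below is only an illustration of that idea. The SQL-to-Java type mapping in `JAVA_TYPES` is a simplified assumption, and `generate_java_class` is a hypothetical name. The classes Sqoop actually generates also include serialization and database read/write code, all of which this sketch omits.

```python
# Simplified SQL-to-Java type mapping (an assumption for this sketch, not Sqoop's full mapping).
JAVA_TYPES = {"INTEGER": "Integer", "TEXT": "String", "REAL": "Double"}


def java_type_for(sql_type):
    """Map a declared SQL type to a Java type, falling back to String."""
    return JAVA_TYPES.get(sql_type.upper(), "String")


def generate_java_class(class_name, columns):
    """Return Java source for a record class with one field and one getter per column."""
    lines = [f"public class {class_name} {{"]
    for name, sql_type in columns:
        lines.append(f"    private {java_type_for(sql_type)} {name};")
    for name, sql_type in columns:
        getter = "get" + name[0].upper() + name[1:]
        lines.append(f"    public {java_type_for(sql_type)} {getter}() {{ return {name}; }}")
    lines.append("}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical metadata, as returned in Step 1 for a table named emp.
    print(generate_java_class("Emp", [("id", "INTEGER"), ("name", "TEXT")]))
```

The generated source would then be compiled and packaged, as described in Step 3.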

Step 3:

- Because Sqoop is written in Java, it then compiles the generated classes, which represent the table structure, and packages the compiled classes into a JAR file (the standard Java packaging format).


WHAT SQOOP DOES

- Apache Sqoop does the following to integrate bulk data movement between Hadoop and structured datastores:

| Function | Benefit |
|---|---|
| Import sequential datasets from mainframe | Satisfies the growing need to move data from mainframes |
| Import directly to ORCFiles | Improved compression and lightweight indexing for improved query performance |
| Data imports | Moves certain data from external stores and EDWs into Hadoop to optimize the cost-effectiveness of combined data storage and processing |
| Parallel data transfer | Faster performance and optimal system utilization |
| Fast data copies | From external systems into Hadoop |
| Efficient data analysis | Improves the efficiency of data analysis by combining structured data with unstructured data in a schema-on-read data lake |
| Load balancing | Mitigates excessive storage and processing loads on other systems |
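Parallel data transfer works by splitting the table across several map tasks. By default Sqoop uses four tasks. It splits on the table's primary key (or a column chosen with `--split-by`), reads that column's low and high values from the database, and gives each task a roughly equal slice of the range. The sketch below shows an even split of an integer range under those assumptions. The helper names `compute_splits` and `split_conditions` are hypothetical, and Sqoop's own splitter arithmetic may produce slightly different boundaries.

```python
def compute_splits(lo, hi, num_mappers=4):
    """Divide the inclusive integer range [lo, hi] into up to num_mappers contiguous ranges.

    Returns a list of inclusive (start, end) pairs; earlier ranges get one extra
    value when the range does not divide evenly.
    """
    if num_mappers < 1:
        raise ValueError("num_mappers must be >= 1")
    total = hi - lo + 1
    if total <= 0:
        return []
    n = min(num_mappers, total)  # never create more splits than there are values
    size, extra = divmod(total, n)
    splits = []
    start = lo
    for i in range(n):
        end = start + size - 1 + (1 if i < extra else 0)
        splits.append((start, end))
        start = end + 1
    return splits


def split_conditions(column, splits):
    """Turn each range into the WHERE condition one map task would use."""
    return [f"{column} >= {start} AND {column} <= {end}" for start, end in splits]


if __name__ == "__main__":
    # Suppose a boundary query found MIN(id) = 1 and MAX(id) = 10.
    for condition in split_conditions("id", compute_splits(1, 10)):
        print(condition)
```

Because each task reads a disjoint range, the tasks can query the database at the same time without overlapping rows.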

- YARN coordinates data ingest from Apache Sqoop and other services that deliver data into the Enterprise Hadoop cluster.