Apache Hive - User Defined Functions, User Defined Types and User Defined Data Formats

apache hive related article tags - hive tutorial - hadoop hive - hadoop hive - hiveql - hive hadoop - learnhive - hive sql

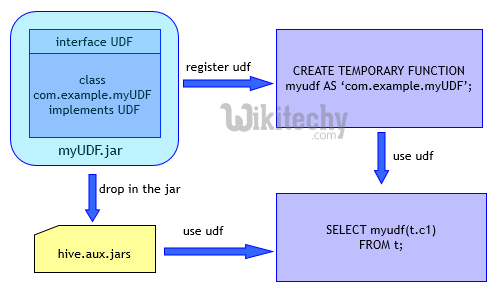

Hive User Defined Functions :

- User Defined Function
  - One-to-one row mapping
  - Concat('foo', 'bar')
- User Defined Aggregate Function
  - Many-to-one row mapping
  - Sum(num_ads)
- User Defined Table Function
  - One-to-many row mapping
  - Explode([1,2,3])
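The three row mappings can be modeled in a few lines of plain Java. This is only a sketch of the shapes involved: the class name `RowMappingSketch` and its methods are hypothetical, it does not use the Hive UDF API, and it ignores details such as NULL handling.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Plain-Java model of the three row mappings; this is NOT the Hive UDF API.
final class RowMappingSketch {

    // UDF style: one input row produces exactly one output value.
    static String concat(String a, String b) {
        return a + b;
    }

    // UDAF style: many input rows collapse into a single value.
    static long sum(List<Integer> numAds) {
        long total = 0;
        for (int n : numAds) {
            total += n;
        }
        return total;
    }

    // UDTF style: one input row (an array) expands into many output rows.
    static List<Integer> explode(List<Integer> array) {
        return new ArrayList<>(array); // each element becomes its own row
    }

    public static void main(String[] args) {
        System.out.println(concat("foo", "bar"));        // one row in, one value out
        System.out.println(sum(Arrays.asList(3, 5, 7))); // three rows in, one value out
        for (int row : explode(Arrays.asList(1, 2, 3))) {
            System.out.println(row);                     // one row in, three rows out
        }
    }
}
```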

Hive User Defined Data Formats :

TABLE GENERATING FUNCTION EXAMPLE

CREATE TABLE arrays (x ARRAY<STRING>)
ROW FORMAT DELIMITED
  FIELDS TERMINATED BY '\001'
  COLLECTION ITEMS TERMINATED BY '\002';

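The data file holds two rows, where ^B stands for the Control-B character ('\002') that separates the array items:

a^Bb

c^Bd^Be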

hive> SELECT * FROM arrays;

["a", "b"]

["c", "d", "e"]

hive> SELECT explode(x) AS y FROM arrays;

a

b

c

d

e
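To make the role of the collection delimiter concrete, here is a minimal plain-Java sketch of what happens to the two stored lines: each line is split on Control-B into an array, and an explode-like step then emits one row per element. The class `ExplodeSketch` and its methods are hypothetical and only imitate the behaviour shown above; the field delimiter '\001' never appears because the table has a single column.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.regex.Pattern;

// Imitates the arrays table in plain Java; not Hive's implementation.
final class ExplodeSketch {

    // Control-B, matching COLLECTION ITEMS TERMINATED BY '\002'.
    static final String ITEM_DELIMITER = "\002";

    // Splits one stored line into the elements of its ARRAY column.
    static List<String> parseArray(String line) {
        return Arrays.asList(line.split(Pattern.quote(ITEM_DELIMITER), -1));
    }

    // Emits one output row per array element, like explode(x).
    static List<String> explodeAll(List<String> lines) {
        List<String> rows = new ArrayList<>();
        for (String line : lines) {
            rows.addAll(parseArray(line));
        }
        return rows;
    }

    public static void main(String[] args) {
        List<String> file = Arrays.asList("a\002b", "c\002d\002e");
        for (String row : explodeAll(file)) {
            System.out.println(row); // prints a, b, c, d, e on separate lines
        }
    }
}
```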

The following UDF strips characters from the ends of a string:

package com.hadoopbook.hive;

import org.apache.commons.lang.StringUtils;
import org.apache.hadoop.hive.ql.exec.UDF;
import org.apache.hadoop.io.Text;

public class Strip extends UDF {
    // Reused across calls so that a new Text is not allocated per row.
    private Text result = new Text();

    // Strips leading and trailing whitespace.
    public Text evaluate(Text str) {
        if (str == null) {
            return null;
        }
        result.set(StringUtils.strip(str.toString()));
        return result;
    }

    // Strips any of the characters in stripChars from both ends.
    public Text evaluate(Text str, String stripChars) {
        if (str == null) {
            return null;
        }
        result.set(StringUtils.strip(str.toString(), stripChars));
        return result;
    }
}

ADD JAR /path/to/hive/hive-example.jar;

CREATE TEMPORARY FUNCTION strip AS 'com.hadoopbook.hive.Strip';

hive> SELECT strip(' bee ') FROM dummy;

bee

hive> SELECT strip('banana', 'ab') FROM dummy;

nan
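The second result may look surprising: 'ab' is treated as a set of characters, not as a prefix or suffix. The sketch below re-implements that stripping rule in plain Java, without Hive or Commons Lang on the classpath, so the behaviour can be checked in isolation. The class `StripSketch` is hypothetical and only approximates `StringUtils.strip`.

```java
// Stand-alone approximation of the stripping rule used by the Strip UDF.
final class StripSketch {

    // Removes leading and trailing characters that occur in stripChars.
    // A null stripChars means "strip whitespace", like the one-argument form.
    static String strip(String str, String stripChars) {
        if (str == null) {
            return null;
        }
        int start = 0;
        int end = str.length();
        while (start < end && shouldStrip(str.charAt(start), stripChars)) {
            start++;
        }
        while (end > start && shouldStrip(str.charAt(end - 1), stripChars)) {
            end--;
        }
        return str.substring(start, end);
    }

    private static boolean shouldStrip(char c, String stripChars) {
        return stripChars == null
                ? Character.isWhitespace(c)
                : stripChars.indexOf(c) >= 0;
    }

    public static void main(String[] args) {
        System.out.println(strip(" bee ", null));   // prints "bee"
        System.out.println(strip("banana", "ab"));  // prints "nan"
    }
}
```

For 'banana', the leading 'b' and 'a' and the trailing 'a' are all in the set, while the 'n' on each side stops the scan, which leaves 'nan'.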

IMPORTING DATA SETS

hive> CREATE TABLE SHOOTING (
        arhivesource string, text string, to_user_id string, from_user string,
        id string, from_user_id string, iso_language_code string, source string,
        profile_image_url string, geo_type string,
        geo_coordinates_0 double, geo_coordinates_1 double,
        created_at string, time int, month int, day int, year int)
      ROW FORMAT DELIMITED FIELDS TERMINATED BY ',';

hive> LOAD DATA LOCAL INPATH '/dlrlhive/shooting/shooting.csv' INTO TABLE shooting;
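One thing worth checking before loading a CSV file this way: as far as I know, the plain delimited row format splits on every occurrence of the delimiter and does not treat quotes specially, so a comma inside a free-text column such as text would shift the remaining columns. The plain-Java sketch below imitates that naive splitting; the class `DelimitedFieldsSketch` and the sample rows are hypothetical and are not taken from shooting.csv.

```java
import java.util.Arrays;
import java.util.List;

// Imitates splitting a line on ',' with no awareness of quoting.
final class DelimitedFieldsSketch {

    // Splits on every comma; the -1 limit keeps trailing empty fields.
    static List<String> splitFields(String line) {
        return Arrays.asList(line.split(",", -1));
    }

    public static void main(String[] args) {
        // Hypothetical three-column rows.
        String plain = "twitter,hello world,42";
        String quoted = "twitter,\"hello, world\",42";

        System.out.println(splitFields(plain).size());  // prints 3
        System.out.println(splitFields(quoted).size()); // prints 4: the quoted comma splits too
    }
}
```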

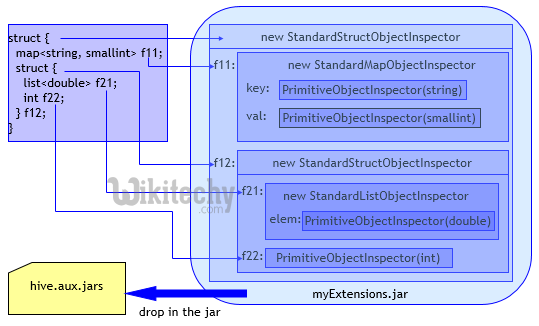

Hive User Defined Types :

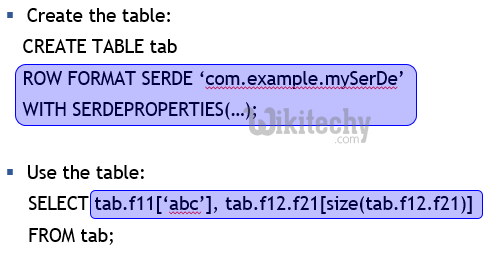

Hive Reading Rich Data :

- Easy to write a SerDe for old data stored in your own format
- Existing SerDe families
  - Thrift DDL based SerDe
  - Delimited text based SerDe
  - Dynamic SerDe to read data with delimited maps, lists and primitive types
  - You can write your own SerDe (XML, JSON …)
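Conceptually, a SerDe is a pair of functions: one turns a stored record into a row of column values, the other turns a row back into a record. The sketch below shows that idea for delimited text in plain Java. The `RowSerDe` interface and `DelimitedTextSerDe` class are hypothetical names invented for illustration; Hive's real SerDe interface has a different shape and works with object inspectors and Writable types.

```java
import java.util.Arrays;
import java.util.List;
import java.util.regex.Pattern;

// Hypothetical interface for illustration; NOT Hive's SerDe API.
interface RowSerDe {
    List<String> deserialize(String record); // stored bytes/text -> row
    String serialize(List<String> row);      // row -> stored bytes/text
}

// A delimited-text implementation of the hypothetical interface.
final class DelimitedTextSerDe implements RowSerDe {
    private final String delimiter;

    DelimitedTextSerDe(String delimiter) {
        this.delimiter = delimiter;
    }

    @Override
    public List<String> deserialize(String record) {
        // Pattern.quote treats the delimiter literally, not as a regex.
        return Arrays.asList(record.split(Pattern.quote(delimiter), -1));
    }

    @Override
    public String serialize(List<String> row) {
        return String.join(delimiter, row);
    }
}

final class SerDeSketchDemo {
    public static void main(String[] args) {
        RowSerDe serde = new DelimitedTextSerDe("\001"); // Control-A field delimiter
        List<String> row = serde.deserialize("a\001b\001c");
        System.out.println(row);                                            // prints [a, b, c]
        System.out.println(serde.serialize(row).equals("a\001b\001c"));     // prints true
    }
}
```

A custom format such as XML or JSON would, in this simplified picture, be another implementation of the same two methods.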

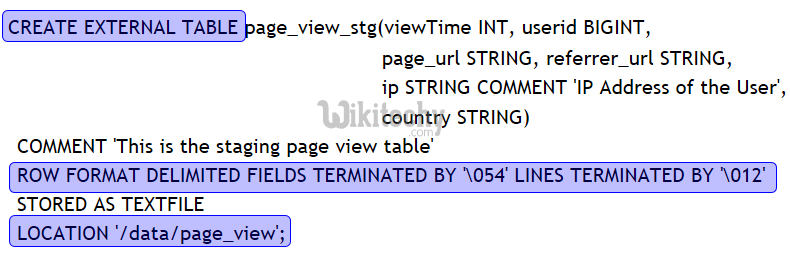

Hive Interoperability – External Tables :

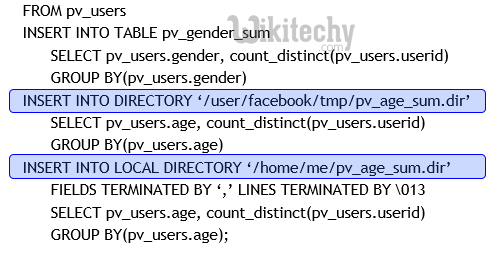

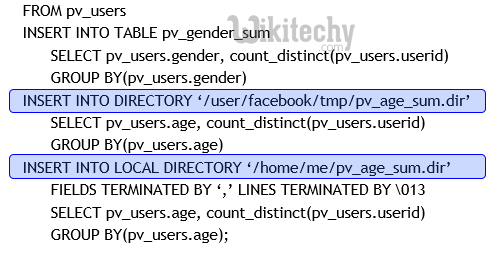

Hive - Insert into Files, Tables and Local Files :

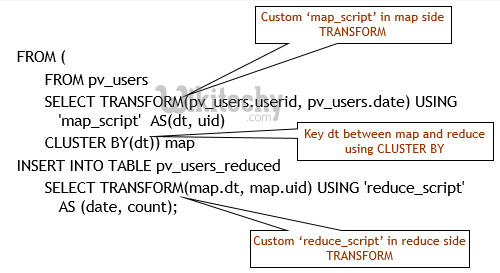

Hive - Extensibility - Custom Map/Reduce Scripts - User defined map reduce scripts :
