Apache Pig CROSS Operator

What is the CROSS operator?

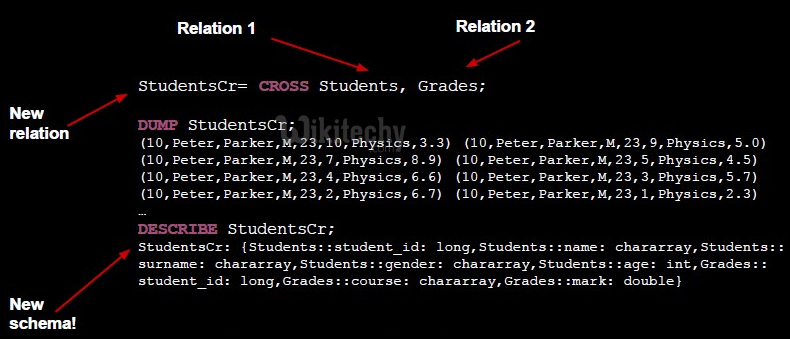

The CROSS operator computes the cross product of two or more relations. This chapter explains, with examples, how to use the CROSS operator in Pig Latin.

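- Result: the Cartesian product of two or more relations. Each output tuple combines one tuple from every input relation, so the number of output tuples is the product of the input sizes.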
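To make the "every combination" behaviour concrete, here is a minimal Python sketch that models CROSS on small in-memory lists of tuples. It is not Pig code: the function name `cross` and the sample relations are hypothetical, and the row order shown is simply the order `itertools.product` generates (Pig itself does not guarantee any order).

```python
from itertools import product


def cross(*relations):
    """Model of CROSS: pick one tuple from each relation in every possible
    combination and concatenate the chosen tuples into one flat tuple."""
    return [sum(combo, ()) for combo in product(*relations)]


# Hypothetical one-field relations.
a = [(1,), (2,)]
b = [("x",), ("y",), ("z",)]
c = [(True,), (False,)]

two_way = cross(a, b)       # 2 * 3 = 6 tuples
three_way = cross(a, b, c)  # 2 * 3 * 2 = 12 tuples

print(len(two_way), len(three_way))  # 6 12
print(two_way[0])                    # (1, 'x')
```

The size check is the key takeaway: the output grows multiplicatively with each added relation, which is why CROSS on large inputs can be very expensive.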

Syntax:

The syntax of the CROSS operator is as follows:

grunt> Relation3_name = CROSS Relation1_name, Relation2_name;

Example 1:

Assume that we have two files, customers.txt and orders.txt, in the /pig_data/ directory of HDFS, with the contents shown below.

customers.txt

1,Ramesh,32,Ahmedabad,2000.00

2,Khilan,25,Delhi,1500.00

3,kaushik,23,Kota,2000.00

4,Chaitali,25,Mumbai,6500.00

5,Hardik,27,Bhopal,8500.00

6,Komal,22,MP,4500.00

7,Muffy,24,Indore,10000.00

orders.txt

102,2009-10-08 00:00:00,3,3000

100,2009-10-08 00:00:00,3,1500

101,2009-11-20 00:00:00,2,1560

103,2008-05-20 00:00:00,4,2060

We have loaded these two files into Pig as the relations customers and orders, as shown below.

grunt> customers = LOAD 'hdfs://localhost:9000/pig_data/customers.txt' USING PigStorage(',') as (id:int, name:chararray, age:int, address:chararray, salary:int);

grunt> orders = LOAD 'hdfs://localhost:9000/pig_data/orders.txt' USING PigStorage(',') as (oid:int, date:chararray, customer_id:int, amount:int);
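What these two LOAD statements do can be modelled in a few lines of Python: split each line on the comma, then cast each field according to its position in the declared schema. This is only an illustrative sketch, not Pig's PigStorage implementation; the names `load`, `to_int`, `CUSTOMER_SCHEMA` and `ORDER_SCHEMA` are hypothetical. The `to_int` fallback (turning "2000.00" into 2000) is an assumption chosen to match the Dump output in this chapter, where salary is declared as int.

```python
# Raw contents of the two input files, as listed above.
CUSTOMERS_TXT = """\
1,Ramesh,32,Ahmedabad,2000.00
2,Khilan,25,Delhi,1500.00
3,kaushik,23,Kota,2000.00
4,Chaitali,25,Mumbai,6500.00
5,Hardik,27,Bhopal,8500.00
6,Komal,22,MP,4500.00
7,Muffy,24,Indore,10000.00
"""

ORDERS_TXT = """\
102,2009-10-08 00:00:00,3,3000
100,2009-10-08 00:00:00,3,1500
101,2009-11-20 00:00:00,2,1560
103,2008-05-20 00:00:00,4,2060
"""


def to_int(field: str) -> int:
    """Cast a text field to int; assumption: "2000.00" becomes 2000."""
    try:
        return int(field)
    except ValueError:
        return int(float(field))


# One cast per schema column, in declaration order.
CUSTOMER_SCHEMA = (to_int, str, to_int, str, to_int)  # id, name, age, address, salary
ORDER_SCHEMA = (to_int, str, to_int, to_int)          # oid, date, customer_id, amount


def load(text: str, schema: tuple, delimiter: str = ",") -> list:
    """Split each non-empty line on the delimiter and cast fields by position."""
    rows = []
    for line in text.splitlines():
        if not line:
            continue  # skip blank lines in this sketch
        fields = line.split(delimiter)
        rows.append(tuple(cast(value) for cast, value in zip(schema, fields)))
    return rows


customers = load(CUSTOMERS_TXT, CUSTOMER_SCHEMA)
orders = load(ORDERS_TXT, ORDER_SCHEMA)
print(customers[0])  # (1, 'Ramesh', 32, 'Ahmedabad', 2000)
print(orders[0])     # (102, '2009-10-08 00:00:00', 3, 3000)
```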

Now compute the cross product of these two relations using the CROSS operator, as shown below.

grunt> cross_data = CROSS customers, orders;
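As a rough local model of this statement, the Python sketch below pairs every customer tuple with every order tuple and concatenates them. It assumes the typed tuples the LOAD statements above would produce (salary shown as an integer, as in the Dump output); it says nothing about how Pig distributes the work on a cluster.

```python
from itertools import product

# Typed tuples as loaded above: (id, name, age, address, salary).
customers = [
    (1, "Ramesh", 32, "Ahmedabad", 2000),
    (2, "Khilan", 25, "Delhi", 1500),
    (3, "kaushik", 23, "Kota", 2000),
    (4, "Chaitali", 25, "Mumbai", 6500),
    (5, "Hardik", 27, "Bhopal", 8500),
    (6, "Komal", 22, "MP", 4500),
    (7, "Muffy", 24, "Indore", 10000),
]
# (oid, date, customer_id, amount)
orders = [
    (102, "2009-10-08 00:00:00", 3, 3000),
    (100, "2009-10-08 00:00:00", 3, 1500),
    (101, "2009-11-20 00:00:00", 2, 1560),
    (103, "2008-05-20 00:00:00", 4, 2060),
]

# Model of: cross_data = CROSS customers, orders;
# Each output tuple is a customer tuple followed by an order tuple (5 + 4 = 9 fields).
cross_data = [c + o for c, o in product(customers, orders)]

print(len(cross_data))  # 28
print(cross_data[0])    # (1, 'Ramesh', 32, 'Ahmedabad', 2000, 102, '2009-10-08 00:00:00', 3, 3000)
```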

Verification:

Verify the relation cross_data using the DUMP operator as shown below.

grunt> Dump cross_data;

Output:

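It will produce the following output, displaying the contents of the relation cross_data. Each of the 7 customers is paired with each of the 4 orders, giving 7 × 4 = 28 tuples. The order of the tuples may differ on your system.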

(7,Muffy,24,Indore,10000,103,2008-05-20 00:00:00,4,2060)
(7,Muffy,24,Indore,10000,101,2009-11-20 00:00:00,2,1560)
(7,Muffy,24,Indore,10000,100,2009-10-08 00:00:00,3,1500)
(7,Muffy,24,Indore,10000,102,2009-10-08 00:00:00,3,3000)
(6,Komal,22,MP,4500,103,2008-05-20 00:00:00,4,2060)
(6,Komal,22,MP,4500,101,2009-11-20 00:00:00,2,1560)
(6,Komal,22,MP,4500,100,2009-10-08 00:00:00,3,1500)
(6,Komal,22,MP,4500,102,2009-10-08 00:00:00,3,3000)
(5,Hardik,27,Bhopal,8500,103,2008-05-20 00:00:00,4,2060)
(5,Hardik,27,Bhopal,8500,101,2009-11-20 00:00:00,2,1560)
(5,Hardik,27,Bhopal,8500,100,2009-10-08 00:00:00,3,1500)
(5,Hardik,27,Bhopal,8500,102,2009-10-08 00:00:00,3,3000)
(4,Chaitali,25,Mumbai,6500,103,2008-05-20 00:00:00,4,2060)
(4,Chaitali,25,Mumbai,6500,101,2009-11-20 00:00:00,2,1560)
(4,Chaitali,25,Mumbai,6500,100,2009-10-08 00:00:00,3,1500)
(4,Chaitali,25,Mumbai,6500,102,2009-10-08 00:00:00,3,3000)
(3,kaushik,23,Kota,2000,103,2008-05-20 00:00:00,4,2060)
(3,kaushik,23,Kota,2000,101,2009-11-20 00:00:00,2,1560)
(3,kaushik,23,Kota,2000,100,2009-10-08 00:00:00,3,1500)
(3,kaushik,23,Kota,2000,102,2009-10-08 00:00:00,3,3000)
(2,Khilan,25,Delhi,1500,103,2008-05-20 00:00:00,4,2060)
(2,Khilan,25,Delhi,1500,101,2009-11-20 00:00:00,2,1560)
(2,Khilan,25,Delhi,1500,100,2009-10-08 00:00:00,3,1500)
(2,Khilan,25,Delhi,1500,102,2009-10-08 00:00:00,3,3000)
(1,Ramesh,32,Ahmedabad,2000,103,2008-05-20 00:00:00,4,2060)
(1,Ramesh,32,Ahmedabad,2000,101,2009-11-20 00:00:00,2,1560)
(1,Ramesh,32,Ahmedabad,2000,100,2009-10-08 00:00:00,3,1500)
(1,Ramesh,32,Ahmedabad,2000,102,2009-10-08 00:00:00,3,3000)
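Because the tuples can come back in any order, comparing a Dump listing line by line against an expected listing is unreliable. One order-independent sanity check, sketched here in Python under the assumption that the customer id is field 0 and the order id (oid) is field 5 of each dumped tuple, is to confirm that every (customer id, order id) pair appears exactly once. The helper names are hypothetical, and the demo uses only a 2 × 2 subset of the rows above.

```python
from collections import Counter
from itertools import product


def parse_dump_line(line):
    """Turn "(2,Khilan,...,1560)" into a tuple of string fields.
    Assumes no field contains a comma (true for this data set)."""
    return tuple(line.strip()[1:-1].split(","))


def covers_every_pair(rows, customer_ids, order_ids):
    """True if each (customer id, order id) pair occurs exactly once,
    regardless of the order in which the rows were dumped."""
    seen = Counter((row[0], row[5]) for row in rows)
    expected = Counter(product(customer_ids, order_ids))
    return seen == expected


sample = [
    "(2,Khilan,25,Delhi,1500,101,2009-11-20 00:00:00,2,1560)",
    "(1,Ramesh,32,Ahmedabad,2000,101,2009-11-20 00:00:00,2,1560)",
    "(2,Khilan,25,Delhi,1500,100,2009-10-08 00:00:00,3,1500)",
    "(1,Ramesh,32,Ahmedabad,2000,100,2009-10-08 00:00:00,3,1500)",
]
rows = [parse_dump_line(line) for line in sample]

print(covers_every_pair(rows, ["1", "2"], ["100", "101"]))               # True
print(covers_every_pair(rows + rows[:1], ["1", "2"], ["100", "101"]))    # False: duplicated row
```

Counting pairs rather than comparing full lines also catches duplicated or missing tuples, which a simple "does each expected line appear somewhere" check would miss.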

Example 2:

Step 1 - Change the directory to /usr/local/pig/bin

$ cd /usr/local/pig/bin

Step 2 - Start the Grunt shell in MapReduce mode.

$ ./pig -x mapreduce

Step 3 - Create a file named customers.txt.

Step 4 - Add the following lines to customers.txt.

1,Ramesh,32,Ahmedabad,2000.00

2,Khilan,25,Delhi,1500.00

3,kaushik,23,Kota,2000.00

4,Chaitali,25,Mumbai,6500.00

5,Hardik,27,Bhopal,8500.00

6,Komal,22,MP,4500.00

7,Muffy,24,Indore,10000.00

Step 5 - Create a file named orders.txt.

Step 6 - Add the following lines to orders.txt.

102,2009-10-08 00:00:00,3,3000

100,2009-10-08 00:00:00,3,1500

101,2009-11-20 00:00:00,2,1560

103,2008-05-20 00:00:00,4,2060
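Steps 3 to 6 can also be scripted instead of typed by hand. The Python sketch below is one hypothetical way to do it: `write_input_files` and the line lists are illustrative names, not part of Pig or Hadoop. The demo writes into a temporary directory; passing "/home/hduser/Desktop/PIG" instead would place the files where Step 7 expects them.

```python
import tempfile
from pathlib import Path

CUSTOMER_LINES = [
    "1,Ramesh,32,Ahmedabad,2000.00",
    "2,Khilan,25,Delhi,1500.00",
    "3,kaushik,23,Kota,2000.00",
    "4,Chaitali,25,Mumbai,6500.00",
    "5,Hardik,27,Bhopal,8500.00",
    "6,Komal,22,MP,4500.00",
    "7,Muffy,24,Indore,10000.00",
]

ORDER_LINES = [
    "102,2009-10-08 00:00:00,3,3000",
    "100,2009-10-08 00:00:00,3,1500",
    "101,2009-11-20 00:00:00,2,1560",
    "103,2008-05-20 00:00:00,4,2060",
]


def write_input_files(directory):
    """Create customers.txt and orders.txt in `directory` (Steps 3-6)
    and return their paths. The directory is created if missing."""
    directory = Path(directory)
    directory.mkdir(parents=True, exist_ok=True)
    customers_path = directory / "customers.txt"
    orders_path = directory / "orders.txt"
    customers_path.write_text("\n".join(CUSTOMER_LINES) + "\n")
    orders_path.write_text("\n".join(ORDER_LINES) + "\n")
    return customers_path, orders_path


# Demo in a throwaway directory.
with tempfile.TemporaryDirectory() as tmp:
    customers_path, orders_path = write_input_files(tmp)
    print(len(customers_path.read_text().splitlines()))  # 7
    print(len(orders_path.read_text().splitlines()))     # 4
```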

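Step 7 - Copy customers.txt and orders.txt from the local file system to HDFS. Run these commands in a separate terminal, not in the Grunt shell. In this example, both files are stored locally in the /home/hduser/Desktop/PIG/ directory; the first command creates the target HDFS directory if it does not already exist.

$ hdfs dfs -mkdir -p /user/hduser/pig

$ hdfs dfs -copyFromLocal /home/hduser/Desktop/PIG/customers.txt /user/hduser/pig/

$ hdfs dfs -copyFromLocal /home/hduser/Desktop/PIG/orders.txt /user/hduser/pig/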

Step 8 - Load the customers data.

customers = LOAD 'hdfs://localhost:9000/user/hduser/pig/customers.txt' USING PigStorage(',') as (id:int, name:chararray, age:int, address:chararray, salary:int);

Step 9 - Load the orders data.

orders = LOAD 'hdfs://localhost:9000/user/hduser/pig/orders.txt' USING PigStorage(',') as (oid:int, date:chararray, customer_id:int, amount:int);

Step 10 - Compute the cross product and display the result.

cross_data = CROSS customers, orders;

Dump cross_data;