pig tutorial - apache pig tutorial - Apache Pig Storing Data - pig latin - apache pig - pig hadoop

What is data storing?

- You can store the loaded data in the file system using the store operator.

- A data store is a repository for persistently storing and managing collections of data which include not just repositories like databases, but also simpler store types such as simple files, emails etc.

- Thus, any database or file is a series of bytes that, once stored, is called a data store.

- Stores the relation into the local FS or HDFS (usually!)

- Useful for debugging

Syntax of the Store statement

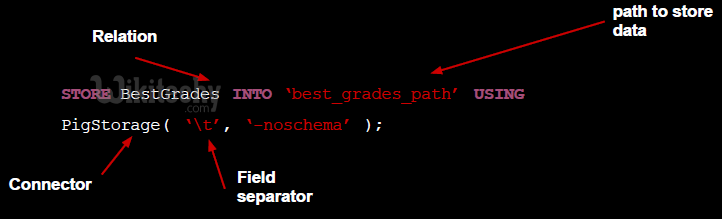

STORE Relation_name INTO ' required_directory_path ' [USING function];

Example:

- Assume we have a file student_data.txt in HDFS with the following content.

001, Aadhira,Arushi ,9848022337, Delhi

002, Mahi,Champa,9848022338, Chennai

003, Avantika,charu,9848022339, Pune

004, Samaira,Hansa,9848022330, Kolkata

005, Abhinav,Akaash,9848022336,Bhuwaneshwar

006, Amarjeet,Aksat,9848022335, Hyderabad

- And we have read it into a relation student using the LOAD operator as shown below.

grunt> student = LOAD 'hdfs://localhost:9000/pig_data/student_data.txt'

USING PigStorage(',')

as ( id:int, firstname:chararray, lastname:chararray, phone:chararray,

city:chararray );

- Now, let us store the relation in the HDFS directory “/pig-Output/” as shown below.

grunt> STORE student INTO ' hdfs://localhost:9000/pig_Output/ ' USING PigStorage (',');

Output:

- After executing the store statement, you will get the following output.

- A directory is created with the specified name and the data will be stored in it.

2015-10-05 13:05:05,429 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.

MapReduceLau ncher - 100% complete

2015-10-05 13:05:05,429 [main] INFO org.apache.pig.tools.pigstats.mapreduce.SimplePigStats -

Script Statistics:

HadoopVersion PigVersion UserId StartedAt FinishedAt Features

2.6.0 0.15.0 Hadoop 2015-10-0 13:03:03 2015-10-05 13:05:05 UNKNOWN

Success!

Job Stats (time in seconds):

JobId Maps Reduces MaxMapTime MinMapTime AvgMapTime MedianMapTime

job_14459_06 1 0 n/a n/a n/a n/a

MaxReduceTime MinReduceTime AvgReduceTime MedianReducetime Alias Feature

0 0 0 0 student MAP_ONLY

OutPut folder

hdfs://localhost:9000/pig-Output/

Input(s): Successfully read 0 records from: "hdfs://localhost:9000/pig_data/student_data.txt"

Output(s): Successfully stored 0 records in: "hdfs://localhost:9000/pig_Output"

Counters:

Total records written : 0

Total bytes written : 0

Spillable Memory Manager spill count : 0

Total bags proactively spilled: 0

Total records proactively spilled: 0

Job DAG: job_1443519499159_0006

2015-10-05 13:06:06,192 [main] INFO org.apache.pig.backend.hadoop.executionengine

.mapReduceLayer.MapReduceLau ncher - Success!

Verification:

- Verify the stored data as shown below.

Step 1:

- First of all, list out the files in the directory named pig_output using the ls command as shown below.

hdfs dfs -ls 'hdfs://localhost:9000/pig_Output/'

Found 2 items

rw-r--r- 1 Hadoop supergroup 0 2015-10-05 13:03 hdfs://localhost:9000/pig_Output/_SUCCESS

rw-r--r- 1 Hadoop supergroup 224 2015-10-05 13:03 hdfs://localhost:9000/pig_Output/part-m-00000

- You can observe that two files were created after executing the store statement.

Step 2:

- Using cat command, list the contents of the file named part-m-00000 as shown below.

$ hdfs dfs -cat 'hdfs://localhost:9000/pig_Output/part-m-00000'

001, Aadhira,Arushi ,9848022337, Delhi

002, Mahi,Champa,9848022338, Chennai

003, Avantika,charu,9848022339, Pune

004, Samaira,Hansa,9848022330, Kolkata

005, Abhinav,Akaash,9848022336,Bhuwaneshwar

006, Amarjeet,Aksat,9848022335, Hyderabad

Using PigStorage:

- Delimiter

- Limitations

- PigStorage is a built-in function of Pig, and one of the most common functions used to load and store data in pigscripts.

- PigStorage can be used to parse text data with an arbitrary delimiter, or to output data in an delimited format.

Delimiter:

- If no argument is provided, PigStorage will assume tab-delimited format.

- If a delimiter argument is provided, it must be a single-byte character; any literal (eg: 'a', '|'), known escape character (eg: '\t', '\r') is a valid delimiter.

Example:

data = LOAD 's3n://input-bucket/input-folder' USING PigStorage(' ')

AS (field0:chararray, field1:int);

- The schema must be provided in the AS clause.

- To store data using PigStorage, the same delimiter rules apply:

STORE data INTO 's3n://output-bucket/output-folder' USING PigStorage('\t');Limitations:

- PigStorage is an extremely simple loader that does not handle special cases such as embedded delimiters or escaped control characters; it will split on every instance of the delimiter regardless of context.

- For this reason, when loading a CSV file it is recommended to use CSVExcelStorage <http://help.mortardata.com/integrations/amazon_s3/csv> rather than PigStorage with a comma delimiter.