[Solved-2 Solutions] Pig UDF in displaying result?

What is a UDF?

- Pig provides extensive support for user defined functions (UDFs) as a way to specify custom processing.

- Pig UDFs can currently be implemented in three languages: Java, Python, and JavaScript. The most extensive support is provided for Java functions.

Problem:

Suppose you have written a UDF in Java and included a System.out.println statement in it. Where does the output of that statement get printed when the UDF runs in Pig?

Solution 1:

- Assuming your UDF extends EvalFunc, you can use the Logger returned from EvalFunc.getLogger().

- The log output should be visible in the associated Map / Reduce task that Pig executes.

- The logs will end up in the MapReduce task log file. Debug your UDF in local mode before deploying it on a cluster.

- By default, errors (e.g. script parsing errors) are logged to pig.logfile, which can be set in $PIG_HOME/conf/pig.properties. If you want to log status messages too, prepare a valid log4j.properties file and set it in the log4jconf property.

- When using Pig v0.10.0 (r1328203), the Pig task doesn't write the job's history logs to the output directory on HDFS.

- If you want these history logs anyway, set mapred.output.dir in your Pig script like this:

set mapred.output.dir '/user/hadoop/test/output';

Here is a sample usage with a sample log4j.properties file.

The -l option names the log file, and the -4 option points to the log4j configuration file:

pig -l /tmp/some.pig.log -4 log4j.properties -x local mysample.pig

cat log4j.properties

# Root logger option

log4j.rootLogger=INFO, file

# Direct log messages to a log file

log4j.logger.org.apache.pig=DEBUG

log4j.logger.org.apache.hadoop=INFO

log4j.appender.file=org.apache.log4j.RollingFileAppender

log4j.appender.file.File=${pig.logfile}

log4j.appender.file.MaxFileSize=1MB

log4j.appender.file.MaxBackupIndex=1

log4j.appender.file.layout=org.apache.log4j.PatternLayout

#log4j.appender.file.layout.ConversionPattern=%d{ABSOLUTE} %5p %c{1}:%L - %m%n

log4j.appender.file.layout.ConversionPattern=%d{ABSOLUTE} %5p [%t] (%F:%L) - %m%n

- The output of the above pig command is stored in the file /tmp/some.pig.log in the typical Apache log4j format.

Solution 2:

- Open the job's tracking URL in a browser. It will fail to open at first, because the address in it is a private one. If you are using an EMR cluster, get the public DNS name of the master node:

Master public DNS:ec2-52-89-98-140.us-west-2.compute.amazonaws.com

Now replace the private address in the URL with the public DNS name, so that it becomes

ec2-52-89-98-140.us-west-2.compute.amazonaws.com:20888/proxy/application_1443585172695_0019/

After opening this address you will notice that the URL has changed. It now points to a private address again, this time on the job history server:

http://ip-172-31-29-193.us-west-2.compute.internal:19888/jobhistory/job/job_1443585172695_0019/

Again, replace the private address with the public DNS name:

ec2-52-89-98-140.us-west-2.compute.amazonaws.com:19888/jobhistory/job/job_1443585172695_0019/
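The host replacement described above can also be done programmatically. The following is only a minimal sketch in Java: the class name HostSwap and the method withHost are hypothetical helpers, not part of Pig, Hadoop, or EMR. It swaps the host of a URL and keeps the scheme, port, and path unchanged.

```java
import java.net.URI;
import java.net.URISyntaxException;

// Hypothetical helper (not part of Pig or Hadoop): swaps the host of a URL.
final class HostSwap {

    // Returns the same URL with only the host replaced; scheme, port,
    // path, query and fragment are kept as they were.
    static String withHost(String url, String newHost) {
        try {
            URI u = new URI(url);
            return new URI(u.getScheme(), u.getUserInfo(), newHost, u.getPort(),
                           u.getPath(), u.getQuery(), u.getFragment()).toString();
        } catch (URISyntaxException e) {
            throw new IllegalArgumentException("not a valid URL: " + url, e);
        }
    }

    public static void main(String[] args) {
        // Private job history URL and master public DNS name from the text above.
        String internal = "http://ip-172-31-29-193.us-west-2.compute.internal:19888"
                + "/jobhistory/job/job_1443585172695_0019/";
        String publicDns = "ec2-52-89-98-140.us-west-2.compute.amazonaws.com";
        System.out.println(withHost(internal, publicDns));
    }
}
```

Replacing only the host, rather than doing a plain string substitution, avoids accidentally touching the port or the job ID in the path.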

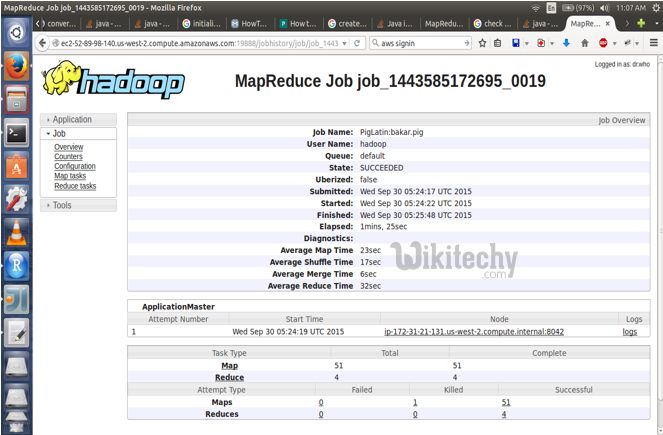

By now you should have arrived at the job's page on the job history server.


- Now determine whether your task (the point where the UDF is called) is executed in the mapper or the reducer phase (before or after the GROUP BY), and click on the corresponding link.

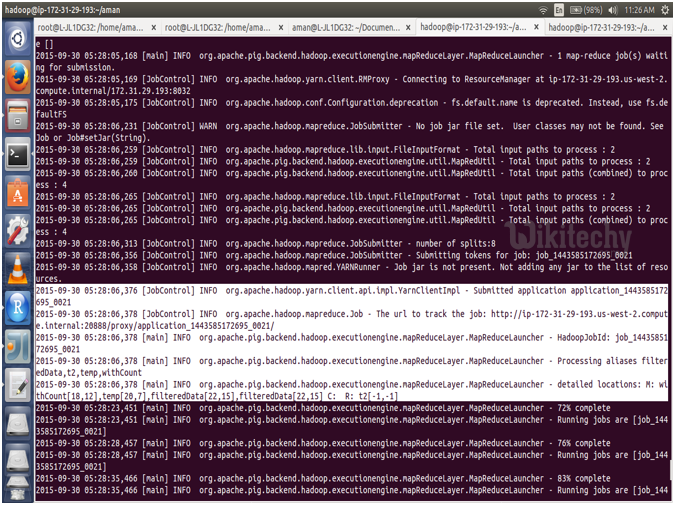

- Now go to the terminal where the Pig logs are shown, find the step where your variable is computed, and get the job ID from there.


- my jobid is

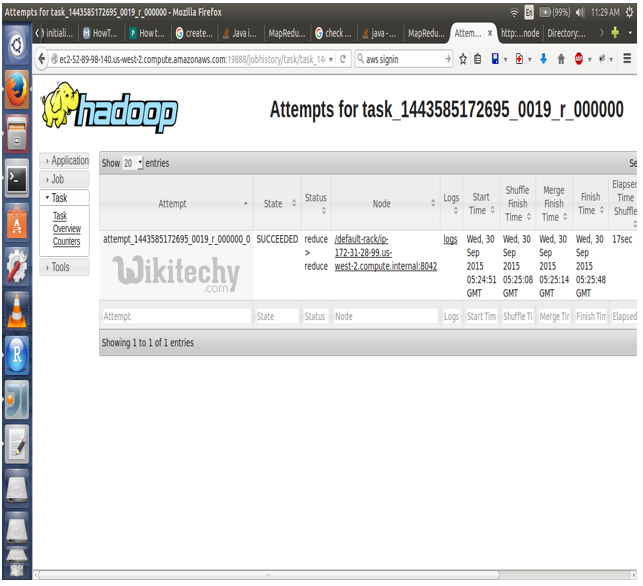

job_1443585172695_0021 - Now in the previous step lets say your variable lies in reduce phase click on that and you will get screen similar


- Get the private IP from there, which is 172-31-28-99 in my case.

- Now go to the EMR page.

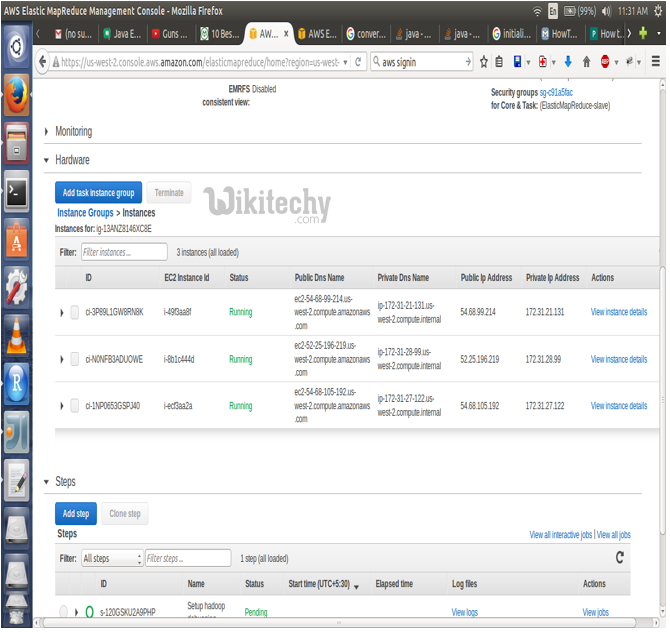

Click on Hardware Instances and then click on View EC2 Instances.
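When matching the private address from the earlier step against the EC2 instance list, keep in mind that the hyphenated form seen in host names (172-31-28-99) corresponds to the dotted IP address 172.31.28.99. The sketch below shows that conversion in Java. The class name PrivateIp and the method toDotted are hypothetical and exist only for illustration.

```java
// Hypothetical helper: turns the hyphenated private address used in
// internal host names into an ordinary dotted IPv4 address.
final class PrivateIp {

    // Accepts "172-31-28-99" or a full internal host name such as
    // "ip-172-31-29-193.us-west-2.compute.internal".
    static String toDotted(String name) {
        String first = name.split("\\.", 2)[0];   // drop any domain suffix
        if (first.startsWith("ip-")) {
            first = first.substring(3);           // drop the "ip-" prefix
        }
        return first.replace('-', '.');
    }

    public static void main(String[] args) {
        System.out.println(toDotted("172-31-28-99"));
        System.out.println(toDotted("ip-172-31-29-193.us-west-2.compute.internal"));
    }
}
```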

- You will get something similar to


- Now get the public ip corresponding to the private IP in my case it is

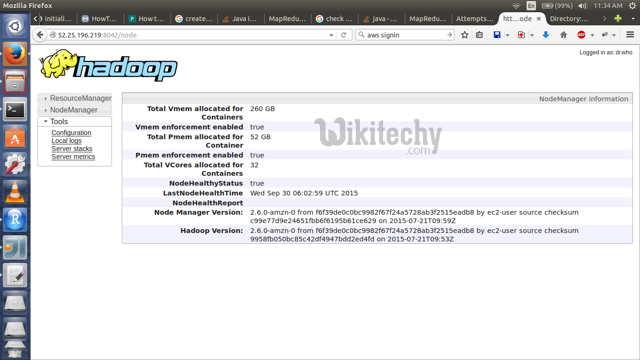

52.25.196.219 - Now open the url publicip:

8042 - ie

52.25.196.219:8042to get something similar to


Click on Tools on the left side and then click Local logs.

Hang on a little longer, we are almost there.

Another page opens. Now navigate as follows:

Click on Container --> your job ID (which we found in image 2; in my case the entry was application_1443585172695_0021/ 4096 bytes Sep 30, 2015 5:28:53 AM) --> there you will find many entries with container as a prefix. Open one of them and you will find stdout. Open it to see the System.out.println message.
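Note that the log directory is named with an application_ prefix, while the ID printed in the terminal starts with job_. The two share the same timestamp and sequence number, so one can be derived from the other. A minimal sketch in Java, using a hypothetical helper class YarnIds that is not part of Hadoop:

```java
// Hypothetical helper: derives the YARN application ID (used as the log
// directory name) from a MapReduce job ID.
final class YarnIds {

    // "job_1443585172695_0021" -> "application_1443585172695_0021"
    static String toApplicationId(String jobId) {
        if (!jobId.startsWith("job_")) {
            throw new IllegalArgumentException("not a job ID: " + jobId);
        }
        return "application_" + jobId.substring("job_".length());
    }

    public static void main(String[] args) {
        System.out.println(toApplicationId("job_1443585172695_0021"));
    }
}
```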

A couple of things to remember:

1) Test your UDF on a local machine.

2) Learn to write unit test cases. They help a lot in debugging.

These two things will save you all the trouble of hunting for the logs.
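One way to make a UDF easy to test on a local machine is to keep its logic in a plain static method that needs no Pig classes, so it can be checked without a cluster. The sketch below assumes a hypothetical class TrimUpper with made-up behaviour (trim and upper-case a string). In a real UDF, the exec() method of your EvalFunc subclass would delegate to such a method.

```java
import java.util.Locale;

// Hypothetical example: the core logic of a UDF kept free of Pig classes
// so that it can be checked locally.
final class TrimUpper {

    // Pure function; a UDF's exec() method could delegate to it.
    static String apply(String input) {
        if (input == null) {
            return null;                 // pass nulls through unchanged
        }
        return input.trim().toUpperCase(Locale.ROOT);
    }

    // Tiny stand-in for a unit-test framework assertion.
    static void check(boolean ok, String what) {
        if (!ok) {
            throw new AssertionError(what);
        }
    }

    public static void main(String[] args) {
        check("HELLO".equals(apply("  hello ")), "trims and upper-cases");
        check(apply(null) == null, "null stays null");
        System.out.println("all checks passed");
    }
}
```

In a real project these checks would normally live in a unit-test framework instead of a main method.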